The comparison of clinical measurement methods is a fundamental component of medical research and diagnostic validation. This process is particularly relevant when introducing novel modalities or devices intended to assess the same physiological parameters as established techniques. Common examples include the measurement of cardiac output, ejection fraction, or ventricular volumes using thermodilution catheters, magnetic resonance imaging (MRI), echocardiography, or pulse contour analysis. The primary objective of these comparisons is to determine whether the alternative method produces measurements sufficiently consistent with those obtained using the reference method to justify clinical interchangeability. A range of statistical approaches is employed in this context, each providing distinct insights into the nature of the association and level of agreement between measurement methods.

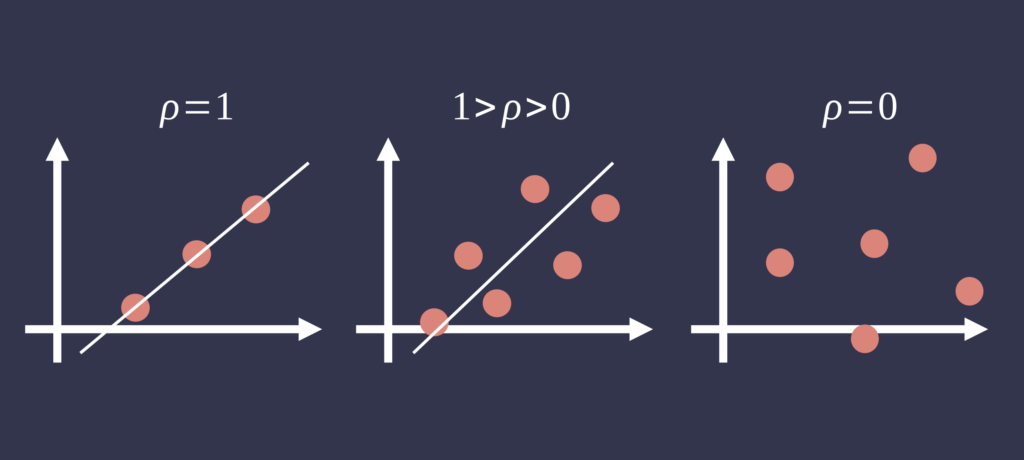

Correlation analysis, typically using Pearson’s correlation coefficient (r), is frequently applied to assess the strength of the linear association between two sets of continuous measurements. While a high correlation coefficient may indicate that both methods track physiological changes similarly, it does not imply agreement in absolute values. For example, a device that consistently reads a fixed amount higher than the reference yields a correlation of 1 even though no pair of readings agrees. Because such systematic differences can persist despite strong correlation, this metric is insufficient for validating measurement equivalence [1].
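The distinction between association and agreement can be illustrated with a minimal sketch. The readings below are hypothetical values chosen for illustration, not data from any study, and `pearson_r` is a helper written for this example.

```python
import math
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical cardiac output readings in L/min (illustrative only).
reference = [3.0, 4.0, 5.0, 6.0, 7.0]
# Assumed test device that always reads 1.5 L/min above the reference.
test = [value + 1.5 for value in reference]

r = pearson_r(reference, test)
bias = mean([t - ref for t, ref in zip(test, reference)])
print(f"r = {r:.3f}, mean difference = {bias:.2f} L/min")
```

In this constructed case the correlation is perfect while every paired reading differs by 1.5 L/min, which is why correlation alone cannot establish interchangeability.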

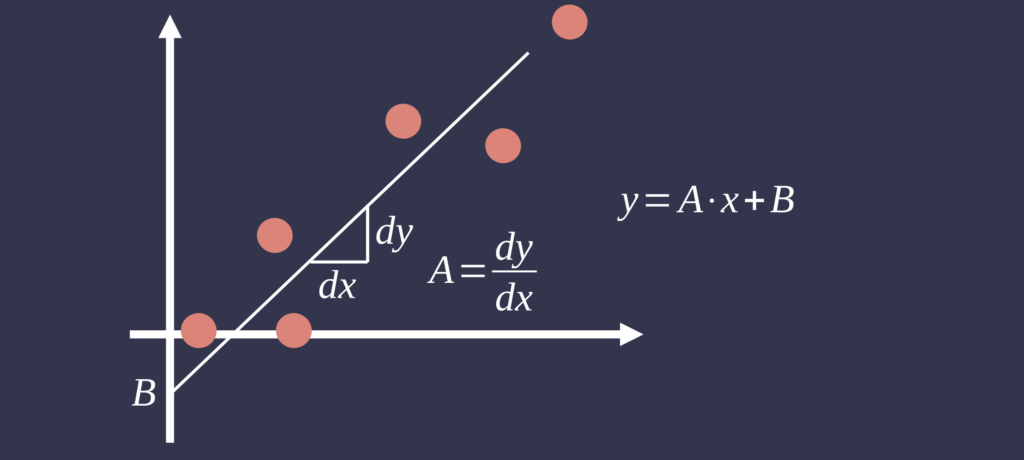

Linear regression analysis extends correlation by quantifying the linear relationship between measurement methods. When a reference method is available, it is typically designated as the independent variable. The slope and intercept of the regression model can reveal systematic deviations between methods: an intercept that differs from zero indicates a fixed bias, and a slope that differs from one indicates a proportional bias. However, ordinary least squares regression assumes that the independent variable is measured without error, a condition rarely met in clinical measurement studies. Consequently, alternative techniques such as Deming regression may be more appropriate when both methods exhibit measurement error [4].
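As a rough sketch of how Deming regression differs from ordinary least squares, the function below uses the standard closed-form slope estimate. The parameter `delta` (the ratio of the error variance of the y-method to that of the x-method) and the sample readings are assumptions made for this illustration; `delta = 1` corresponds to equal error variances in both methods.

```python
import math
from statistics import mean

def deming_regression(x, y, delta=1.0):
    """Return (slope, intercept) of a Deming regression of y on x.

    delta is the assumed ratio of error variances (y-method / x-method).
    Requires a non-zero sample covariance between x and y.
    """
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x) / (n - 1)
    syy = sum((b - my) ** 2 for b in y) / (n - 1)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    diff = syy - delta * sxx
    slope = (diff + math.sqrt(diff ** 2 + 4 * delta * sxy ** 2)) / (2 * sxy)
    intercept = my - slope * mx
    return slope, intercept

# Hypothetical paired readings in L/min (illustrative only).
method_x = [3.1, 4.0, 4.8, 6.2, 7.1]
method_y = [3.6, 4.9, 5.4, 7.3, 8.0]

slope, intercept = deming_regression(method_x, method_y)
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
```

In practice `delta` would be estimated from replicate measurements of each method rather than assumed; the default of 1 is only a convenient starting point.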

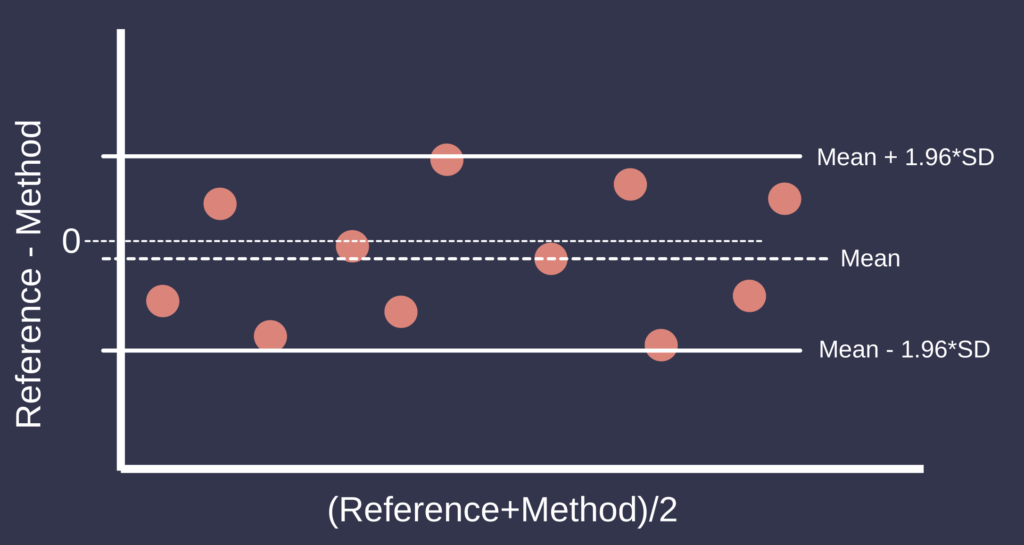

To assess agreement directly, Bland–Altman analysis is widely regarded as the standard approach. This method involves plotting the difference between paired measurements against their mean, thereby enabling the evaluation of bias (the mean difference) and the limits of agreement (LoA), defined as the mean difference ± 1.96 standard deviations of the differences. If the differences are approximately normally distributed, about 95% of them are expected to fall within these limits. This framework facilitates the identification of systematic bias, heteroscedasticity, and outliers. The interpretation of Bland–Altman analysis depends on the clinical context and on a definition of acceptable limits of agreement [2].
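The bias and limits of agreement described above can be computed in a few lines. This is a minimal sketch that assumes one measurement pair per subject; the function name `bland_altman` and the readings are hypothetical.

```python
from statistics import mean, stdev

def bland_altman(method_a, method_b):
    """Compute Bland-Altman bias and 95% limits of agreement.

    Differences are taken as method_a minus method_b. The returned
    'means' and 'diffs' lists are the x and y coordinates of the plot.
    """
    diffs = [a - b for a, b in zip(method_a, method_b)]
    means = [(a + b) / 2 for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return {
        "bias": bias,
        "sd": sd,
        "loa_lower": bias - 1.96 * sd,
        "loa_upper": bias + 1.96 * sd,
        "means": means,
        "diffs": diffs,
    }

# Hypothetical paired readings in L/min (illustrative only).
new_method = [5.2, 4.8, 6.1, 5.5, 4.9]
ref_method = [5.0, 5.0, 6.0, 5.0, 5.0]

result = bland_altman(new_method, ref_method)
print(f"bias = {result['bias']:.2f} L/min, "
      f"LoA = [{result['loa_lower']:.2f}, {result['loa_upper']:.2f}] L/min")
```

Studies with repeated measurements per subject need a modified variance estimate, which this sketch does not attempt.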

For specific applications such as cardiac output monitoring, Critchley and Critchley proposed using a percentage error derived from Bland–Altman analysis to assess the clinical acceptability of a new measurement method. The percentage error is calculated as 1.96 times the standard deviation of the differences between the methods, divided by the mean cardiac output, and expressed as a percentage. A percentage error of less than 30% is generally considered acceptable. This threshold rests on the assumption that the reference method has an inherent error of approximately ±20% and that a test method is acceptable if its own error is no larger than that of the reference. Assuming the two errors are independent and normally distributed, they combine as the square root of the sum of the squared individual errors: √(20² + 20²) ≈ 28.3%, which is conventionally rounded to 30%. This approach provides a standardized criterion for determining whether a novel device or technique is suitable for clinical use in hemodynamic monitoring [5].
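The arithmetic behind the percentage error and the 30% threshold can be sketched as follows. The choice of denominator here (the mean of all paired averages) is an assumption of this example, as are the function names and readings.

```python
import math
from statistics import mean, stdev

def percentage_error(reference, test):
    """Percentage error: 1.96 * SD of differences / mean cardiac output * 100.

    Assumption: mean cardiac output is taken as the mean of the paired
    averages of both methods.
    """
    diffs = [t - r for t, r in zip(test, reference)]
    mean_co = mean([(t + r) / 2 for t, r in zip(test, reference)])
    return 100 * 1.96 * stdev(diffs) / mean_co

def combined_error(reference_error_pct, test_error_pct):
    """Combine two independent percentage errors in quadrature."""
    return math.sqrt(reference_error_pct ** 2 + test_error_pct ** 2)

# Hypothetical paired readings in L/min (illustrative only).
ref_co = [5.0, 5.0, 6.0, 5.0, 5.0]
test_co = [5.2, 4.8, 6.1, 5.5, 4.9]

pe = percentage_error(ref_co, test_co)
threshold = combined_error(20, 20)
print(f"percentage error = {pe:.1f}%, combined-error threshold = {threshold:.1f}%")
```

If the reference method is more or less precise than ±20%, `combined_error` can be re-evaluated with the actual value to see how the acceptable limit shifts.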

Lin’s concordance correlation coefficient (CCC) offers a quantitative measure of agreement that incorporates both precision and accuracy. The CCC evaluates the degree to which observed data deviate from the line of perfect concordance (i.e., the identity line). It penalizes both random variation and systematic bias, producing a coefficient that approaches unity only when both are minimal. While CCC provides a concise summary measure of agreement, it is most informative when used alongside Bland–Altman analysis and graphical methods [3].
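A short sketch makes the penalty for systematic bias concrete. It uses the usual form of the coefficient, twice the covariance divided by the sum of the two variances and the squared difference in means, with variances computed using a 1/n divisor. The function name `lins_ccc` and the readings are hypothetical.

```python
from statistics import mean

def lins_ccc(x, y):
    """Lin's concordance correlation coefficient.

    CCC = 2 * s_xy / (s_x^2 + s_y^2 + (mean_x - mean_y)^2),
    with variances and covariance computed using a 1/n divisor.
    """
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return 2 * sxy / (sxx + syy + (mx - my) ** 2)

# Hypothetical cardiac output readings in L/min (illustrative only).
reference = [3.0, 4.0, 5.0, 6.0, 7.0]
# Assumed test device with a constant offset of 1.5 L/min.
shifted = [value + 1.5 for value in reference]

print(f"CCC, identical readings: {lins_ccc(reference, reference):.2f}")
print(f"CCC, constant offset:    {lins_ccc(reference, shifted):.2f}")
```

The offset readings are perfectly correlated with the reference, yet the squared mean difference in the denominator pulls the CCC well below 1.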

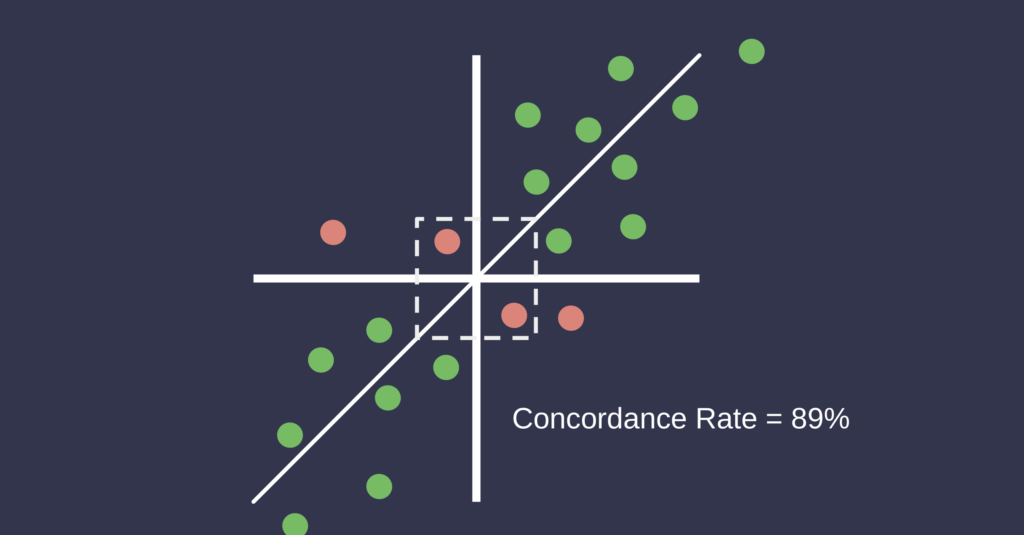

In addition to static agreement metrics, the assessment of dynamic measurement trends is important, particularly in the context of hemodynamic monitoring. Four-quadrant concordance analysis is commonly used to evaluate the agreement of directional changes between two methods over time. This method involves plotting the changes (delta values) from one time point to the next for both methods on Cartesian axes, with each axis representing the change in measurement for one method. The data points fall into four quadrants, and concordance is defined as the proportion of points that fall into the upper right and lower left quadrants, where both methods show a change in the same direction. An exclusion zone is typically applied around the origin to disregard small changes considered to be within the noise of the measurement system. A concordance rate above 90% is generally considered indicative of good trending agreement. This method is especially useful in critical care and perioperative settings, where reliable detection of directional changes often matters more than exact agreement in absolute values [6].
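The concordance rate can be sketched as below. Several details are assumptions of this example rather than fixed conventions: a pair of changes is excluded only when both fall inside the exclusion zone, the zone is given in absolute units, and points lying exactly on an axis count as discordant. The function name and the readings are hypothetical.

```python
def four_quadrant_concordance(reference, test, exclusion=0.5):
    """Proportion of consecutive changes that agree in direction.

    Pairs where both changes lie within +/- exclusion are dropped
    (assumed central exclusion zone). Returns None if no pairs remain.
    """
    d_ref = [b - a for a, b in zip(reference, reference[1:])]
    d_test = [b - a for a, b in zip(test, test[1:])]
    kept = [
        (dr, dt)
        for dr, dt in zip(d_ref, d_test)
        if abs(dr) > exclusion or abs(dt) > exclusion
    ]
    if not kept:
        return None
    # Same sign means upper-right or lower-left quadrant.
    concordant = sum(1 for dr, dt in kept if dr * dt > 0)
    return concordant / len(kept)

# Hypothetical serial cardiac output readings in L/min (illustrative only).
ref_series = [4.0, 5.0, 4.2, 4.3, 5.5, 4.5]
test_series = [4.1, 5.2, 4.6, 4.5, 5.3, 6.0]

rate = four_quadrant_concordance(ref_series, test_series)
print(f"concordance rate = {rate:.0%}")
```

Exclusion zones are also commonly expressed as a percentage change rather than an absolute value; adapting the sketch would mean computing relative deltas before filtering.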

The selection and interpretation of statistical methods depend on whether a gold standard measurement exists. When a reference method is available, the analysis focuses on assessing the accuracy and precision of the alternative method relative to this standard. In the absence of a gold standard, both methods are assumed to have measurement error, and agreement must be interpreted symmetrically. In such cases, Bland–Altman analysis and CCC are particularly useful for evaluating interchangeability without assuming one method represents the true value.

In summary, the evaluation of clinical measurement methods necessitates a multifaceted statistical approach. Correlation analysis characterizes the strength of the linear association between methods, and regression analysis identifies fixed and proportional bias. Bland–Altman analysis provides a robust framework for assessing agreement, while Lin’s CCC offers an aggregate measure of concordance. The percentage error method, particularly in the context of cardiac output validation, introduces a clinically interpretable criterion for acceptability. Four-quadrant concordance analysis further enhances the evaluation by determining the agreement of directional changes over time. These complementary methods support rigorous evaluation of whether a novel measurement technique may be adopted in place of an existing standard.

References

1. Altman, D. G., & Bland, J. M. (1983). Measurement in medicine: the analysis of method comparison studies. The Statistician, 32(3), 307–317.

2. Bland, J. M., & Altman, D. G. (1986). Statistical methods for assessing agreement between two methods of clinical measurement. The Lancet, 327(8476), 307–310.

3. Lin, L. I. (1989). A concordance correlation coefficient to evaluate reproducibility. Biometrics, 45(1), 255–268.

4. Linnet, K. (1993). Evaluation of regression procedures for methods comparison studies. Clinical Chemistry, 39(3), 424–432.

5. Critchley, L. A., & Critchley, J. A. (1999). A meta-analysis of studies using bias and precision statistics to compare cardiac output measurement techniques. Journal of Clinical Monitoring and Computing, 15(2), 85–91.

6. Critchley, L. A., Lee, A., & Ho, A. M. H. (2010). A critical review of the ability of continuous cardiac output monitors to measure trends in cardiac output. Anesthesia & Analgesia, 111(5), 1180–1192.